The shape of assessment


This blog post was originally published on David Didau's Learning Spy blog.

As we should all now be aware, there are no external audiences interested in schools’ internal data. If we’re going to go to the trouble of getting students to sit formal assessments on which we will collect data, we should be very clear about the purpose both of the assessments and the data they produce.

On the whole, the purpose of assessment data appears to be discriminating between students. The purpose of GCSEs, SATs, A levels and other national exams is to discriminate between students – to place each individual’s performance in a normally distributed rank order and then assign grades at different cut-off points – so that we know who is ‘good’ and ‘bad’ at different subjects. Whatever you think of this as a system of summative assessment (and there are some reasonable arguments in support of it), there’s absolutely no need for schools to replicate the process internally.

Previously, I’ve argued that the purpose of formal internal assessments should be twofold. First, they should provide periodic statements of competence, confirming that students have mastered curriculum content sufficiently well to progress onwards. Second, they should act as a mechanism for assuring the quality of the curriculum and how well it’s taught.

If we are using the curriculum as our progression model, all we need to know is how well students have learned this aspect of the curriculum. Whilst the purpose of a GCSE exam is to say how well students have performed relative to one another, a test designed to assess how much of the curriculum has been learned should not be concerned with discriminating between students. Ideally, if the curriculum were perfectly specified and taught, all students would score close to 100%. Clearly, we’ll never quite reach this state of perfection, so if we achieve average scores of 90% and above, we can be well satisfied that we are specifying and teaching the curriculum exceptionally well.

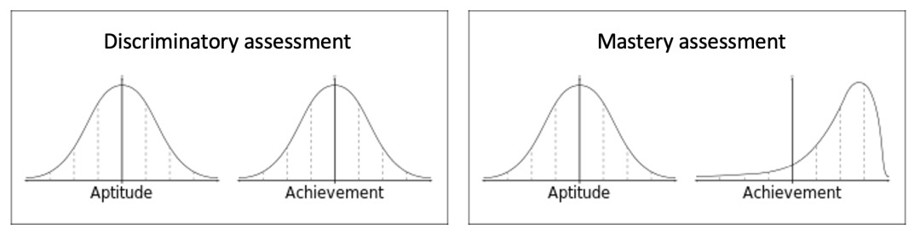

This approach to assessment – let’s call it ‘mastery assessment’ – would be expected to produce a very different ‘shape’ from traditional, discriminatory assessment.

When an assessment is designed to discriminate between students, it will, given a normal distribution of students’ aptitude, produce a bell curve. By contrast, an assessment designed to allow students to demonstrate mastery of the curriculum should produce something that looks more like a slope, with most students scoring towards the top of the range.

A discriminatory assessment is designed to produce winners and losers: to celebrate some students as ‘able’ and others as ‘less able’. While we might tell ourselves that the purpose of such an assessment is to find out who needs additional support, in most cases this should be clear to teachers well in advance of formal assessments. We might also believe that discriminatory assessments may be motivating. It’s certainly true that those who find themselves near the top of the distribution may well enjoy competing against each other, and it’s equally true that there might be fierce competition to avoid being at the bottom, but most students will, by necessity, learn that they are distinctly average. Doing poorly in this style of assessment is likely to create a self-fulfilling prophecy in which students learn early on that they’re ‘rubbish’ at certain (perhaps all) subjects. What’s motivating about that?

With mastery style assessment, if students fail to meet a minimum threshold, our default assumption should be that there is a fault either in the design of the curriculum or in its teaching. The fault should be acknowledged as ours rather than blamed on students. If our guiding assumption were that any test score below, say, 80% highlighted some fault in the curriculum or instruction, this could transform the educational experiences of our most disadvantaged students. Instead of seeing tests as demoralising pass/fail cliff-edges, students might come to see them as useful benchmarks of progress to strive towards.

To move towards mastery style assessment, I think we need to be clear on the following principles:

- Never assess students on something that hasn’t been explicitly taught.

- Any areas of the curriculum that can be described as ‘skills’ must be broken down into teachable components of knowledge which can be learned and practised.

- Until the teaching of these skills has been fully sequenced in the curriculum, test items should be as granular as possible to allow all students to be successful.

- If students struggle to answer test items, we should assume the fault lies with the curriculum (if students across multiple classes struggle) or with instruction (if students in a particular class struggle).

- Not only do we need teaching to be responsive to students’ needs, we also need to think in terms of a responsive curriculum.

David Didau is a former teacher, now a freelance education writer, speaker, and trainer.